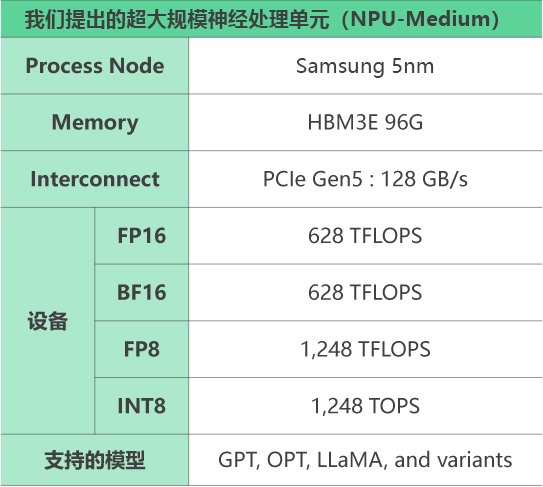

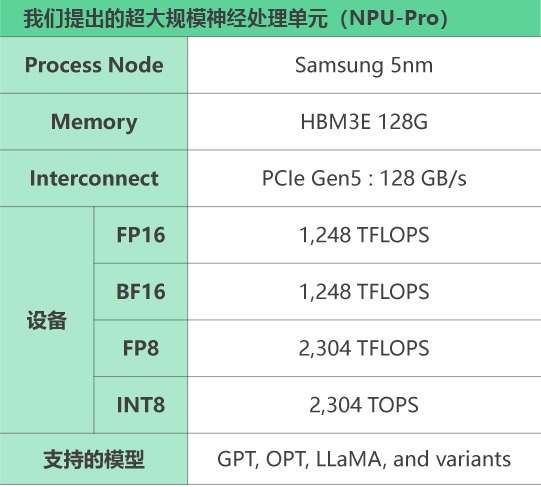

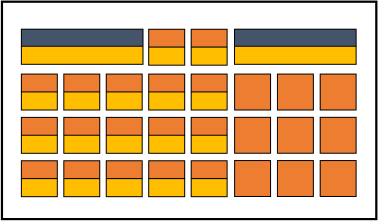

Suggested Architecture

*The process node may be adjusted (5 nm or 4 nm) depending on the foundry's service availability.

We will use Samsung's 5-nanometer process* and its HBM3E memory to design AI chips for generative AI models (such as GPT-3 and LLaMA). This is a multi-billion-parameter, small-batch, memory-intensive workload.
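To illustrate why a small-batch, multi-billion-parameter workload tends to be memory-intensive, here is a minimal roofline-style sketch. It assumes each weight is read from memory once per decoding step and used in one multiply-add per sequence in the batch, and it ignores KV-cache and activation traffic. The function names and the hardware figures in the usage note are hypothetical and are not specifications of any chip discussed here.

```python
def decode_arithmetic_intensity(batch_size: int, bytes_per_param: float = 2.0) -> float:
    """FLOPs performed per byte of weight traffic in one decoding step.

    Assumption: every parameter is read once (bytes_per_param bytes) and
    used in one multiply-add (2 FLOPs) for each sequence in the batch.
    """
    return 2.0 * batch_size / bytes_per_param


def is_memory_bound(batch_size: int, peak_flops: float, mem_bandwidth: float,
                    bytes_per_param: float = 2.0) -> bool:
    """True if the step sits below the roofline ridge point (FLOPs per byte)."""
    ridge_point = peak_flops / mem_bandwidth
    return decode_arithmetic_intensity(batch_size, bytes_per_param) < ridge_point


def min_step_time_s(num_params: float, mem_bandwidth: float,
                    bytes_per_param: float = 2.0) -> float:
    """Lower bound on one decoding step: time to stream all weights once."""
    return num_params * bytes_per_param / mem_bandwidth
```

With a hypothetical accelerator offering 1e15 FLOP/s and 3e12 bytes/s of memory bandwidth, `is_memory_bound(1, 1e15, 3e12)` is true while `is_memory_bound(512, 1e15, 3e12)` is false. Under these assumptions, memory bandwidth rather than raw compute sets the ceiling at small batch sizes, which is the motivation for pairing the processor with high-bandwidth memory.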


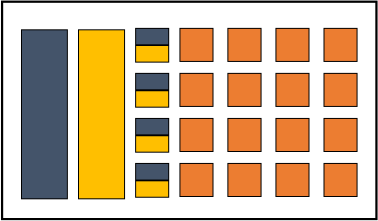

To design AI semiconductors for servers more effectively, NPU-specialized architectures should be used instead of GPUs, and NPUs will become mainstream in the future.

*Many companies such as Google, Microsoft, Tesla, etc. are manufacturing dedic

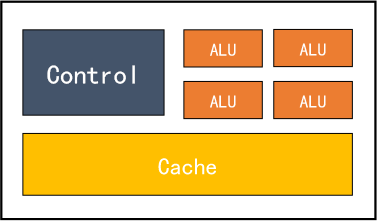

CPU Architecture: General Architecture

GPU Architecture: Parallel Optimization Architecture

NPU Architecture: Optimization Architecture for Memory Operation Mode

A highly optimized and flexible processor architecture, coupled with world-leading HBM technology, delivers excellent performance (>30%) on generative AI workloads.

The cost-effectiveness (>2 times) and power efficiency (>3 times) are both superior to the existing H100.